Mobile Audio: Challenges and Solutions

This text is a translation of an article originally published on GameDev DOU.

Mobile games’ technical and aesthetic requirements are constantly rising, bringing them closer to the level of their console and PC counterparts. Sound design requirements are no exception, but phones have many technical limitations, such as the size of the default build, volume, simultaneous playback of sounds, and CPU load. An incorrectly built sound system can hurt a mobile game’s store rating, because store algorithms take crashes into account, and that rating in turn determines the project’s future. Diagnosing these problems and reworking an already released project costs a company much more than taking a systematic approach to sound from the very beginning of development.

My name is Illia Gogoliev, and I’m an Audio Producer at Plarium. For over 10 years I’ve been working on sound design for mobile games, cinematics, TV, and online advertising. The best-known Plarium projects that we develop and support with the Audio Team include RAID: Shadow Legends and Mech Arena. In this article, I want to talk about how to optimize audio content for a mobile game in a way that harms neither the project nor the players. This article will be useful both for sound designers working in-house or as outsourcers, and for indie developers who handle sound on their own.

Why you should optimize sound for mobile games

A phone is not a games console: it isn’t built for gaming the way a PlayStation or an Xbox is. As developers, we have to adapt to a wide range of devices rather than one specific model. Games that aren’t optimized for phones deliver a poor gaming experience and, as a result, lose players.

Why do players leave?

- A game takes too long to load

- A game is too big

- A game slows down or crashes

- The sound and visuals are not synchronized

- The sound is too quiet or too loud (which is annoying and affects gameplay)

There are probably more reasons, but I’ll be focusing on those where sound optimization can affect the final result.

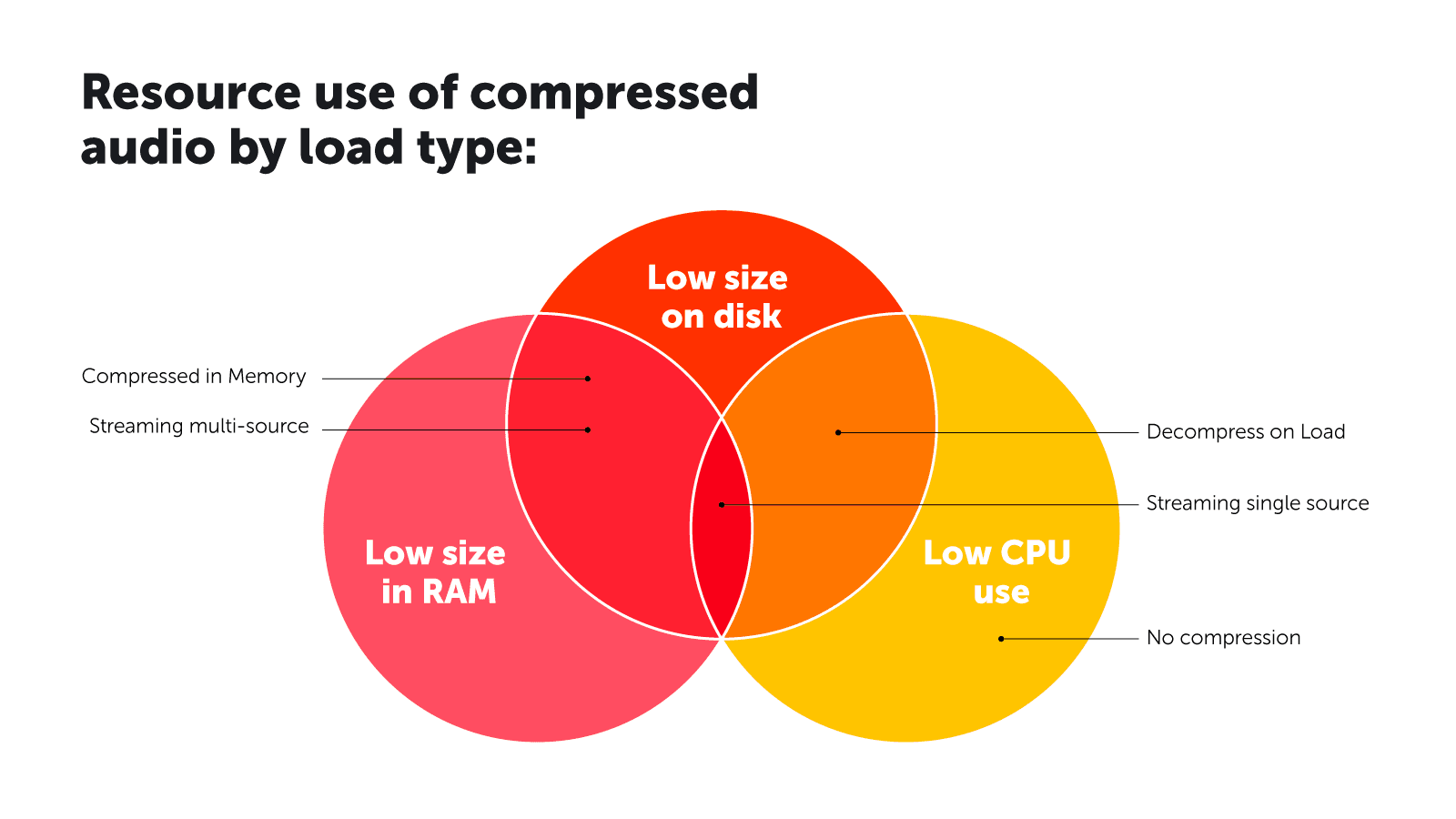

Optimization is a constant balancing act of available resources, such as RAM, disk space, and CPU use.

Source: an article on Unity Audio Import Optimisation by designer Zander Hulme

This diagram is relevant for anyone who has to optimize a mobile game – both technical artists and developers.

Challenge: limiting a build size

The limit put on the size of default builds by the App Store and Google Play has greatly influenced the model of mobile game production and development. Currently, in both stores the limit is 200 MB. In this article about mobile app sizes and optimization strategies for the App Store, marketer Jonathan Fishman gives the following research findings:

**Apps that exceed 200 MB can experience a 15–25% decrease in conversion rates due to a message warning users about the app’s size.**

I would add that, with tens or hundreds of thousands of players, even a much smaller drop in conversion can be reason enough for a developer to optimize a game and keep its build size at 200 MB or less.

Solutions

Start profiling resources in the game as early as possible; you can do this in Unity even if you don’t use middleware. Know in advance how many features you plan to release, estimate how much space their sound will need in the default build, and document these estimates.

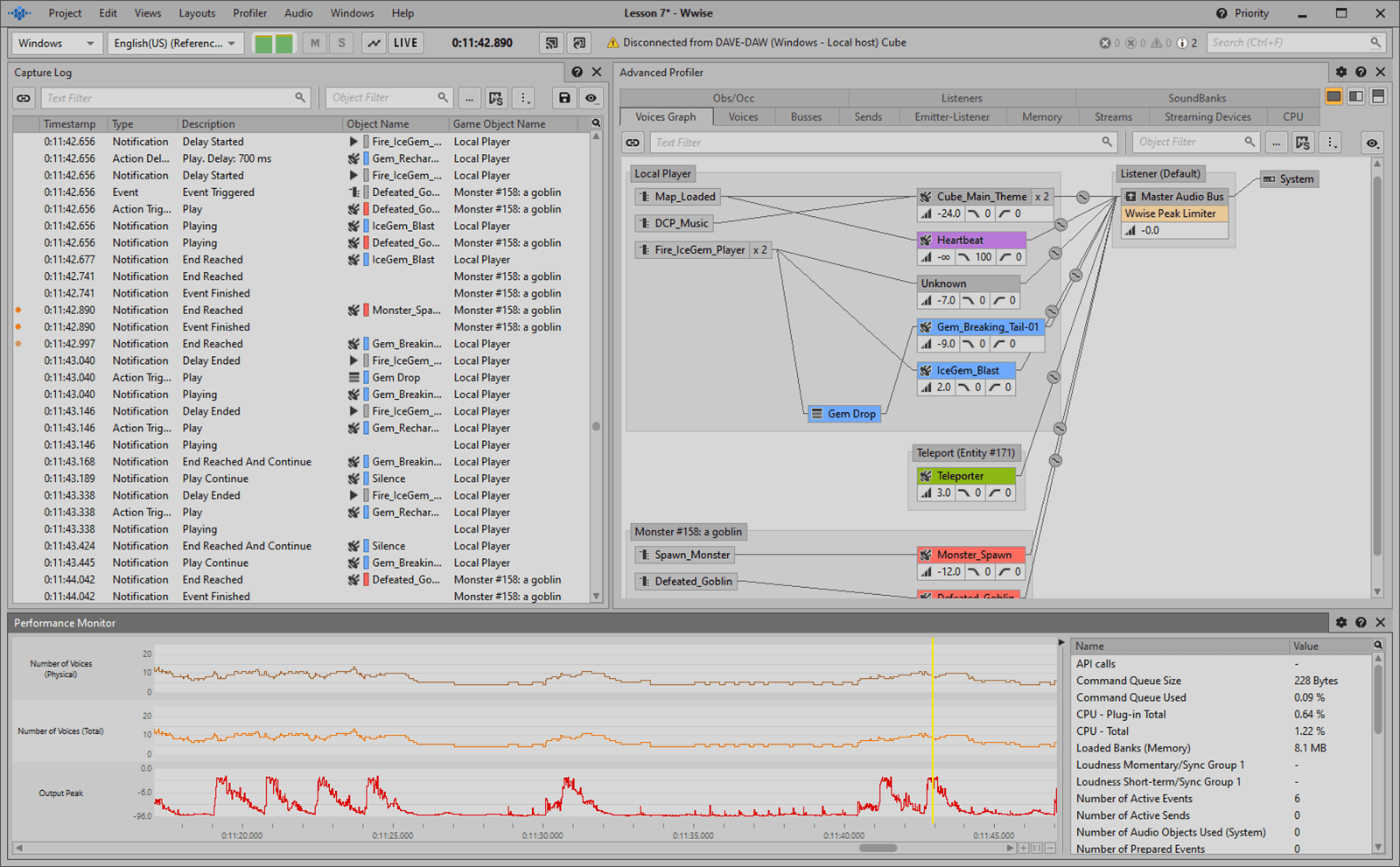

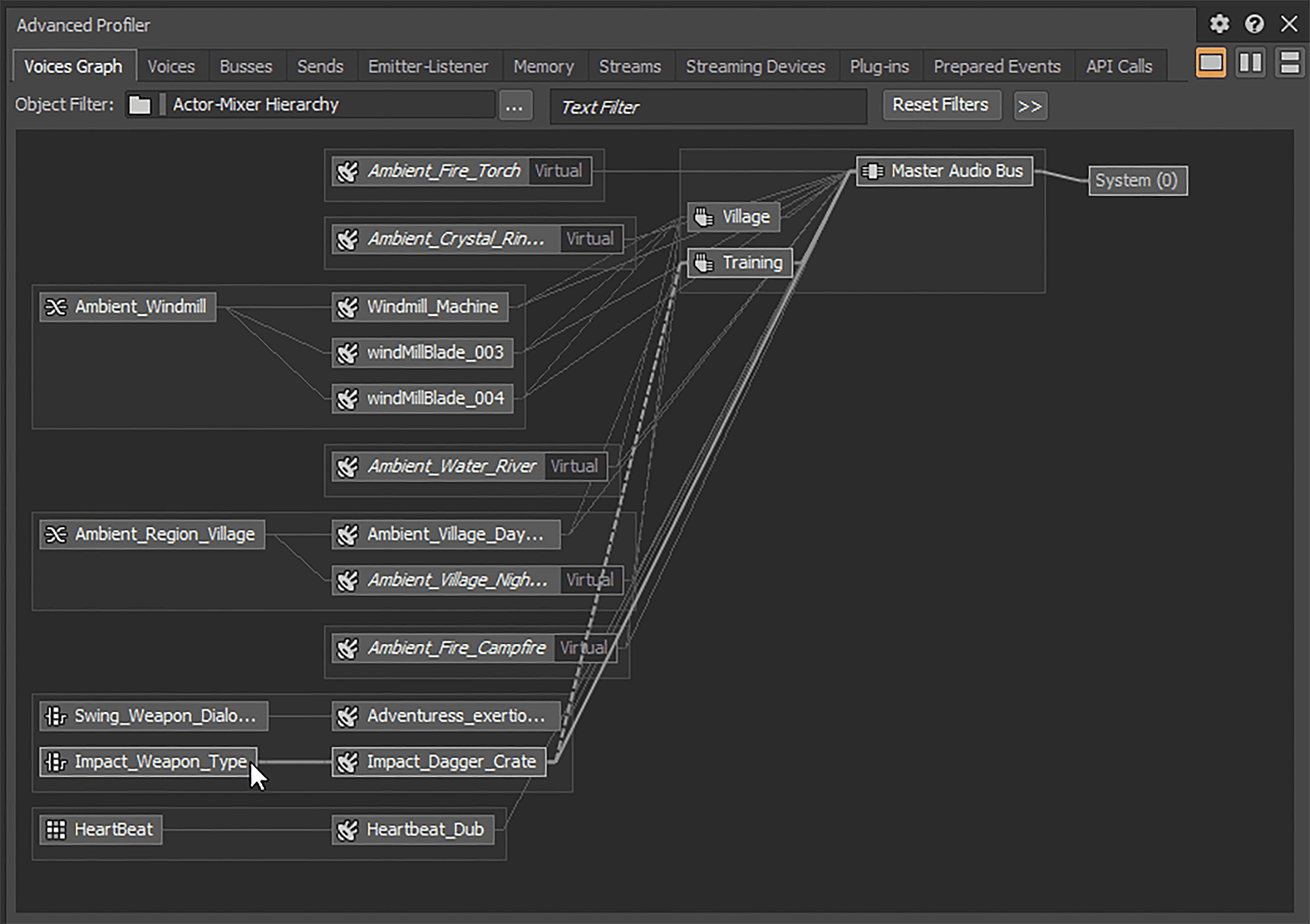

The hierarchy of all objects that relate to sound in a game at a particular moment in the Audiokinetic Wwise profiler. Here we can see how the volume and weight of resources affect the capabilities of the device that’s playing the game (source: audiokinetic.com)

From our experience, the audio assets will have to be allocated at least 10% of the total size of the default build.

This places rather strict requirements on file compression, which we’ll look at below. Additional content is downloaded later through asset bundles.

Build an audio content hierarchy as early as possible. How will you divide this small amount of space in the build for different audio content? The proportions may depend, for example, on the genre. Sometimes you need to have more ambient sounds, other times more interface sounds, music, voices, etc. The sooner you organize your resources, the easier it will be for you to develop a game and predict new features’ sizes. Established technical boundaries will increase the creativity of your solutions as you’ll need to adapt to certain limitations.
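One way to capture these estimates is to turn them into explicit per-category budgets and check new assets against them. The Python sketch below assumes a 200 MB default build, the 10% audio share mentioned above, and made-up category proportions. Replace them with your own genre-specific split.

```python
# Assumed numbers: a 200 MB default build with roughly 10% reserved for audio.
BUILD_LIMIT_MB = 200
AUDIO_SHARE = 0.10

# Hypothetical split by category; the right proportions depend on the genre.
CATEGORY_SHARES = {
    "music": 0.35,
    "sfx": 0.30,
    "voice": 0.15,
    "ui": 0.10,
    "ambience": 0.10,
}


def category_budgets_mb(build_limit_mb: float, audio_share: float,
                        shares: dict[str, float]) -> dict[str, float]:
    """Split the audio part of the build into per-category budgets (MB)."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("category shares must add up to 1.0")
    audio_total = build_limit_mb * audio_share
    return {name: round(audio_total * share, 2) for name, share in shares.items()}


def over_budget(measured_mb: dict[str, float], budgets: dict[str, float]) -> list[str]:
    """Categories whose current assets already exceed their budget."""
    return [name for name, size in measured_mb.items() if size > budgets.get(name, 0.0)]


if __name__ == "__main__":
    budgets = category_budgets_mb(BUILD_LIMIT_MB, AUDIO_SHARE, CATEGORY_SHARES)
    print(budgets)
    # Hypothetical measured sizes from the current build.
    print(over_budget({"music": 6.2, "ui": 2.4}, budgets))
```

Running the check on every build makes it obvious early which category is eating into the others’ space.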

Challenge: resource compression

You can reduce resource size by adjusting:

- Sample rate

- Format and quality

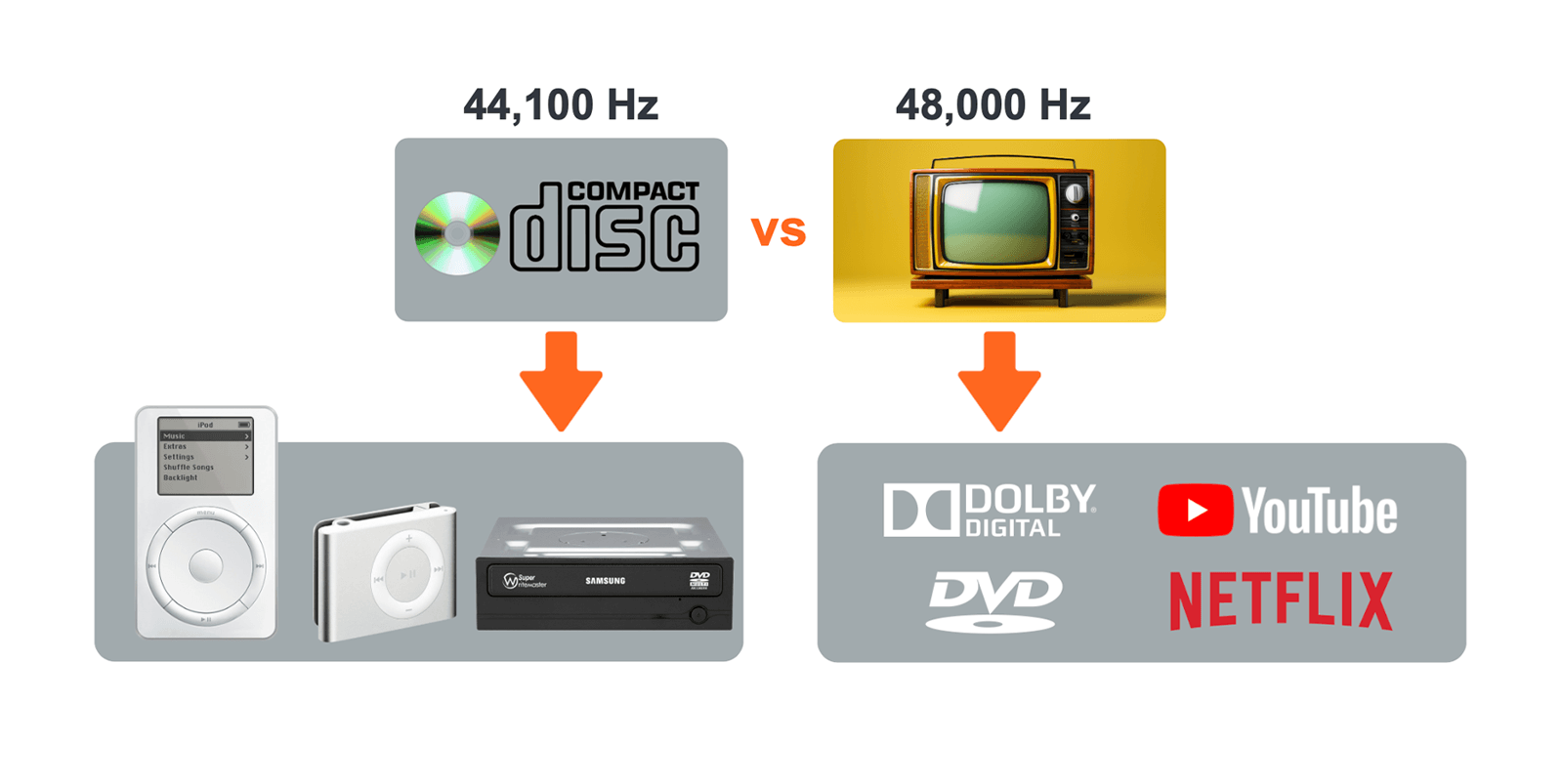

I won’t go too deep into the history; you just need to understand how phones work with sample rates. The two most popular media sample rates, 44,100 Hz and 48,000 Hz, have long existed in parallel, but they come from different sources:

The 44,100 Hz rate was used for CDs, while 48,000 Hz was used for movies and cable TV. Both had a huge impact on the further development of devices and services. As the successors to MP3 players, smartphones long operated at 44,100 Hz. Since the release of the iPhone 6S, however, phones have been flooded with 48,000 Hz content from Netflix, YouTube, other streaming services, and large video games.

When a phone runs at its native sample rate and an app makes it play files at a different rate, the device has to convert those files on the fly, although you won’t notice this by ear. Depending on whether the file’s sample rate is lowered or raised to the working rate, this process is called downsampling or upsampling respectively. So even if you saved space on the device by lowering the sample rate of your assets, you’ve added load to the CPU. This is why phones have largely switched to 48,000 Hz: it lets them play most content at their native rate without conversion.

Solutions

Google Developers recommends leaving all content at 48,000 Hz, because that is the sample rate the vast majority of phones run at. However, this is only possible in a perfect world; in practice, you’ll most likely need compression.

If you need to save space by lowering the sample rate of your assets, start with 24,000 Hz or 16,000 Hz. Upsampling them to 48,000 Hz means multiplying the sample rate by two or three, which is the simplest mathematical operation for the phone to perform. The first candidates for extreme compression are voice-overs, ambient sounds, and other sounds where the noise component prevails over the tonal one, but listen carefully to the result to detect artifacts.
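To make the arithmetic behind this recommendation concrete, here is a small Python sketch that computes the exact conversion ratio from an asset’s sample rate to a device running at 48,000 Hz. The 48,000 Hz device rate is an assumption taken from the recommendation above; the actual resamplers on Android and iOS belong to the OS and are not modeled here. The sketch only shows which rates convert by a whole-number factor.

```python
from fractions import Fraction

# Assumption: the target phone's native output rate is 48,000 Hz.
DEVICE_RATE_HZ = 48_000


def conversion_ratio(asset_rate_hz: int, device_rate_hz: int = DEVICE_RATE_HZ) -> Fraction:
    """Exact factor by which the device must change the asset's sample rate."""
    return Fraction(device_rate_hz, asset_rate_hz)


def is_whole_number_upsample(asset_rate_hz: int, device_rate_hz: int = DEVICE_RATE_HZ) -> bool:
    """True when the asset is upsampled by an integer factor (2x, 3x, ...)."""
    ratio = conversion_ratio(asset_rate_hz, device_rate_hz)
    return ratio > 1 and ratio.denominator == 1


if __name__ == "__main__":
    for rate in (48_000, 44_100, 24_000, 20_000, 16_000):
        ratio = conversion_ratio(rate)
        print(f"{rate} Hz -> x{ratio} (whole-number upsample: {is_whole_number_upsample(rate)})")
```

24,000 Hz and 16,000 Hz come out as x2 and x3, while 44,100 Hz needs a 160/147 conversion and 20,000 Hz needs 12/5, neither of which is a whole number.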

Is it possible to avoid these compromises altogether? Yes, if you have a good understanding of how your game will perform and the profiler shows that sample rate conversion and larger formats don’t affect performance. Also, keep your creative idea in mind: these solutions don’t mean you have to compress everything the way Google suggests.

The most commonly used formats for compressing audio assets

- ADPCM compresses assets at a fixed ratio, reducing file size about three times. Unpacking is very fast and uses almost no CPU resources. This format is perfect for UI sounds when, for example, you need a fast response to a button press.

- Vorbis lets you compress files the most, but decompressing them uses more CPU. To judge whether the result is good enough, listen carefully to check that the sound has time to decompress without an audible delay. Vorbis offers a quality scale, but there’s a nuance: the quality parameter is a setting of the encoder, not a direct measure of the quality of the file you get after compression. Tools also define it differently: Unity uses a scale from 1 to 100, while Wwise uses a logarithmic formula, which is why quality there can be 0 or even –1.

Listen to this voice over example from a recent RAID: Shadow Legends release:

Example:

- Source file: 48 kHz PCM WAV – 1 MB

- Compressed by sample rate: 24 kHz PCM WAV – 500 kB

- Compressed by quality, with music added (as it will be heard in the game): OGG Vorbis (quality ~0.4) – 100 kB
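The first two sizes follow directly from how uncompressed PCM is stored: size grows linearly with sample rate, bit depth, channel count, and duration. The sketch below assumes 16-bit mono audio (the example above doesn’t state bit depth or channel count) and ignores the WAV header, which is only a few dozen bytes. It shows why halving the sample rate halves the file, and expresses the Vorbis step as a compression ratio.

```python
def pcm_size_bytes(sample_rate_hz: int, bit_depth: int, channels: int, seconds: float) -> int:
    """Size of raw PCM audio data in bytes (WAV header not included)."""
    bytes_per_sample = bit_depth // 8
    return round(sample_rate_hz * bytes_per_sample * channels * seconds)


def compression_ratio(original_bytes: int, compressed_bytes: int) -> float:
    """How many times smaller the compressed file is."""
    return original_bytes / compressed_bytes


if __name__ == "__main__":
    # One second of 16-bit mono audio at each rate (assumed format).
    full = pcm_size_bytes(48_000, 16, 1, 1.0)  # 96,000 bytes
    half = pcm_size_bytes(24_000, 16, 1, 1.0)  # 48,000 bytes
    print(full, half, full / half)             # halving the rate halves the size
    # Sizes quoted in the example above: ~1 MB WAV vs. ~100 kB Vorbis.
    print(compression_ratio(1_000_000, 100_000))
```

With the rounded sizes from the example, the Vorbis file is roughly ten times smaller than the 48 kHz source.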

If you ignore the Google Developers recommendations mentioned earlier, you can compress the file even further, for example down to 20 kHz or to a lower Vorbis quality. But, firstly, you can only do this until artifacts become audible. Secondly, consider the priority of this audio content and how important it is to you.

Challenge: RAM priority system

Phones have a priority system for all operations. A lot of memory is reserved for processes that phones consider to be more important than your game – for example emergency notifications, calls, health apps, etc. Moreover, users almost always use several applications at the same time – for example they can watch Netflix, download files, and use instant messengers simultaneously.

Conclusion: a game will never be able to use the full amount of memory listed in a phone’s specifications.

So you need to stay within certain limits; otherwise, the phone will simply start killing processes with a lower priority, including your game.

How do you figure out how much memory a game uses for sound? Add up the sizes of all the soundbanks loaded in a scene: that total is your memory usage. Keep everything a scene or a particular feature needs, and very strictly limit everything it doesn’t, such as the sounds of a menu, a cinematic, or a previous scene, which should already be unloaded from memory.
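The same rule can be written as a quick estimate. In this sketch, the soundbank names, their sizes, and the per-scene budget are all hypothetical; in practice, the sizes would come from your build output or the profiler.

```python
# Hypothetical soundbank sizes in kilobytes.
BANK_SIZES_KB = {
    "Common_UI": 800,
    "Menu": 1_500,
    "Battle_SFX": 4_000,
    "Battle_Music": 3_000,
    "Cinematic_Intro": 6_000,
}

# Which banks each scene keeps loaded (hypothetical).
SCENE_BANKS = {
    "menu": ["Common_UI", "Menu"],
    "battle": ["Common_UI", "Battle_SFX", "Battle_Music"],
}


def scene_audio_memory_kb(scene: str) -> int:
    """Estimated sound memory for a scene: the sum of its loaded soundbanks."""
    return sum(BANK_SIZES_KB[bank] for bank in SCENE_BANKS[scene])


def banks_to_unload(previous_scene: str, next_scene: str) -> set[str]:
    """Banks the previous scene used that the next scene does not need."""
    return set(SCENE_BANKS[previous_scene]) - set(SCENE_BANKS[next_scene])


if __name__ == "__main__":
    budget_kb = 8_000  # hypothetical per-scene sound memory budget
    for scene in SCENE_BANKS:
        used = scene_audio_memory_kb(scene)
        print(scene, used, "OK" if used <= budget_kb else "OVER BUDGET")
    print(banks_to_unload("menu", "battle"))
```

The cinematic bank is never counted because no scene keeps it loaded, which is exactly the behavior you want from banks that are only needed briefly.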

Why this matters:

- Using more memory than necessary = the FPS drops and the game turns into a “slideshow”

- Using excessive memory (for example, if you don’t control the loading and unloading of soundbanks) = crashes when you exceed memory limits, which irritate players, cost them their progress, and make them leave

Solutions

Identify the target device and run thorough tests on it.

Start profiling as early as possible and predict how much memory is used for each scene and feature.

Make a lot of small soundbanks – it is better than one big soundbank. At first, it may seem difficult to manage, but in the future this approach will allow you to avoid using unnecessary resources in a game.

Build a flexible system of soundbank triggers. Control the conditions under which soundbanks are loaded or unloaded from memory. Preferably, this should be done manually. A good practice is to use middleware such as Audiokinetic Wwise. Unlike Unity Audio, where everything is a bit “under the hood”, in Wwise you control the loading of literally every sound and its life cycle during a game.
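A minimal sketch of such a trigger system, assuming you wrap your engine’s or middleware’s bank-loading calls in two callbacks. `SoundbankManager`, `acquire`, `release`, and `switch_scene` are hypothetical names, not part of the Wwise or Unity API. Reference counting keeps a bank shared by two features in memory until the last one releases it.

```python
from collections import Counter
from typing import Callable, Iterable


class SoundbankManager:
    """Reference-counted soundbank loading (hypothetical sketch, not a middleware API).

    load_bank / unload_bank stand in for whatever your engine or middleware
    actually calls to load a bank into memory and free it again.
    """

    def __init__(self, load_bank: Callable[[str], None], unload_bank: Callable[[str], None]):
        self._load_bank = load_bank
        self._unload_bank = unload_bank
        self._refs: Counter = Counter()

    def acquire(self, bank: str) -> None:
        """Request a bank; it is loaded only on the first request."""
        if self._refs[bank] == 0:
            self._load_bank(bank)
        self._refs[bank] += 1

    def release(self, bank: str) -> None:
        """Drop a request; the bank is unloaded when nothing needs it anymore."""
        if self._refs[bank] == 0:
            raise ValueError(f"bank {bank!r} is not loaded")
        self._refs[bank] -= 1
        if self._refs[bank] == 0:
            del self._refs[bank]
            self._unload_bank(bank)

    def loaded(self) -> set[str]:
        return set(self._refs)


def switch_scene(manager: SoundbankManager, old_banks: Iterable[str], new_banks: Iterable[str]) -> None:
    """Load the next scene's banks first, then release the previous scene's."""
    for bank in new_banks:
        manager.acquire(bank)
    for bank in old_banks:
        manager.release(bank)
```

Acquiring the next scene’s banks before releasing the previous scene’s keeps shared banks, such as a common UI bank, from being unloaded and immediately reloaded.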

Use streaming. Not Spotify, but a way of packaging resources on disk so that the game reads and decodes files during playback instead of loading them entirely into RAM. You can stream music, ambient sounds, and voice-overs. But don’t overuse it: on the one hand, you use less memory, but on the other hand, you load the CPU.
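A simple way to apply this advice is a per-asset rule that decides whether to stream a file or load it into memory, plus a cap on simultaneous streams. The categories come from the paragraph above; the 10-second threshold and the limit of two concurrent streams are hypothetical values to tune against the profiler on your target device.

```python
from dataclasses import dataclass

# Categories the text suggests streaming.
STREAMABLE_CATEGORIES = {"music", "ambience", "voice"}
# Hypothetical tuning values: check them on your target device.
MIN_STREAM_SECONDS = 10.0
MAX_CONCURRENT_STREAMS = 2


@dataclass
class AudioAsset:
    name: str
    category: str
    duration_s: float


def should_stream(asset: AudioAsset) -> bool:
    """Stream long music, ambience, and voice files; keep short sounds in memory."""
    return asset.category in STREAMABLE_CATEGORIES and asset.duration_s >= MIN_STREAM_SECONDS


def can_start_stream(active_streams: int, limit: int = MAX_CONCURRENT_STREAMS) -> bool:
    """Refuse new streams past the cap so streaming doesn't overload the CPU."""
    return active_streams < limit


if __name__ == "__main__":
    assets = [
        AudioAsset("main_theme", "music", 120.0),
        AudioAsset("forest_loop", "ambience", 45.0),
        AudioAsset("button_click", "ui", 0.2),
        AudioAsset("short_bark", "voice", 2.5),
    ]
    for asset in assets:
        print(asset.name, "stream" if should_stream(asset) else "memory")
```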

Challenge: the limited number of physical voices

A mobile phone can only play a limited number of sound streams simultaneously. These streams are called “physical voices”, and their number is quite strictly limited. This article on how to get a hold on your voices suggests a limit of 50 to 100 voices. In our team’s experience, that’s a very optimistic estimate.

We consider 20 to 30 simultaneous voices to be the risk zone: playing that many at once is the easiest way to run into audio crashes on both Android and iOS.

Imagine you’re creating a match-3 game with several objects in the scene, for example, a pineapple that explodes. You attach one sound to the asset, but on the next level, there are 15 pineapples. You need to limit both the volume and the number of sounds. Besides the fact that 15 exploding pineapples are too loud, they also load the CPU.

Solutions

Set priorities. You have to build a hierarchy of sound importance. Determine which sounds are the most important and should never be sacrificed, even if you exceed the voice limit. And vice versa – which assets have a lower priority and can be dropped in the case of reaching the critical limit zone?

For example, we can add a condition: no matter how many pineapples explode in the scene, the player will hear no more than three voices of this type. The player only needs to understand that several pineapples exploded, while the phone must not be overloaded with volume or with many identical processes.
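The “no more than three” rule can be sketched as a per-sound instance limiter that simply refuses new requests once the cap is reached. The class and sound names are hypothetical, and middleware such as Wwise offers built-in playback limits; this only illustrates the logic.

```python
from collections import Counter


class InstanceLimiter:
    """Caps simultaneous instances per sound type (hypothetical sketch).

    When a sound type is at its cap, new requests are simply dropped.
    """

    def __init__(self, max_instances: dict[str, int], default_max: int = 8):
        self.max_instances = max_instances
        self.default_max = default_max  # hypothetical fallback cap
        self.playing: Counter = Counter()

    def try_play(self, sound: str) -> bool:
        """Start a sound if its type is under the cap; return whether it started."""
        limit = self.max_instances.get(sound, self.default_max)
        if self.playing[sound] >= limit:
            return False
        self.playing[sound] += 1
        return True

    def on_finished(self, sound: str) -> None:
        """Call when an instance stops, freeing a slot for that sound type."""
        if self.playing[sound] > 0:
            self.playing[sound] -= 1


if __name__ == "__main__":
    limiter = InstanceLimiter({"pineapple_explode": 3})
    started = [limiter.try_play("pineapple_explode") for _ in range(15)]
    print(sum(started))  # 3 of the 15 explosions are actually heard
```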

The hierarchy of sounds in the Audiokinetic Wwise profiler looks like a queue of live events that “make their way” to the listener

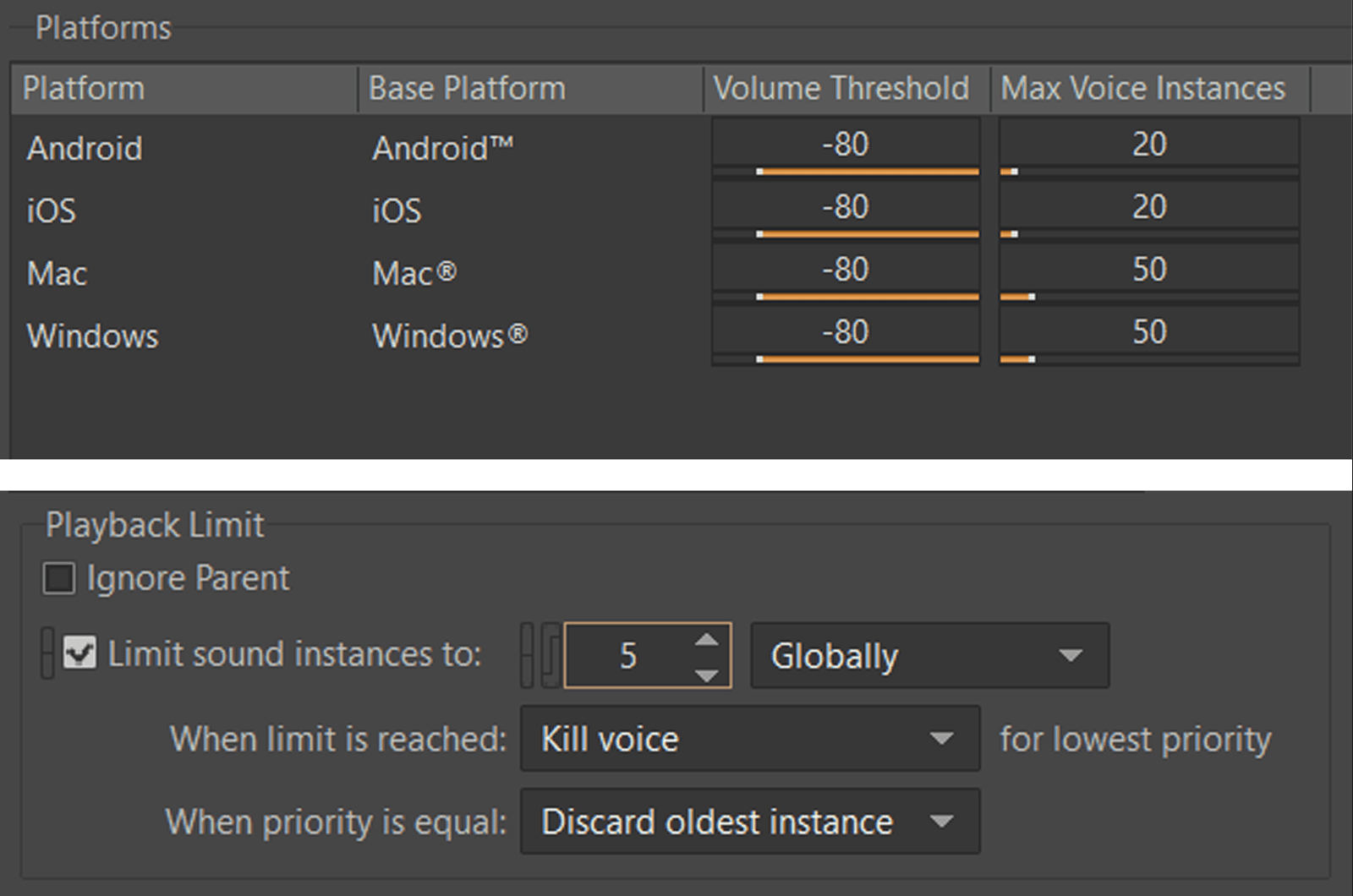

Set voice limits. Limits can be either global (for a particular platform) or per asset. In the second case, you choose how your engine gets rid of excess sounds: for example, by stopping the oldest instances or by not allowing new ones to play until a physical voice becomes free.

Setting up limits by platform and per asset
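The two behaviors above can be combined with the priority hierarchy from the previous section in a global voice pool. This is a simplified sketch with hypothetical names: when the pool is full, a new sound steals the least important voice if it outranks it; at equal priority, the `steal_oldest_on_tie` flag chooses between stopping the oldest voice and refusing the new one. Real engines also handle virtual voices and fade-outs, which are omitted here.

```python
from dataclasses import dataclass
from itertools import count
from typing import Optional, Tuple


@dataclass
class Voice:
    sound: str
    priority: int  # higher number = more important
    order: int     # start order; lower = older


class VoicePool:
    """Global physical-voice limit with priority-based voice stealing (hypothetical sketch)."""

    def __init__(self, max_voices: int, steal_oldest_on_tie: bool = True):
        self.max_voices = max_voices
        # True: a new sound replaces the oldest voice of equal priority ("stop old").
        # False: it is refused instead ("don't let new ones play").
        self.steal_oldest_on_tie = steal_oldest_on_tie
        self.voices: list[Voice] = []
        self._order = count()

    def play(self, sound: str, priority: int) -> Tuple[bool, Optional[str]]:
        """Try to start a sound; return (started, name of the stolen voice or None)."""
        if len(self.voices) < self.max_voices:
            self.voices.append(Voice(sound, priority, next(self._order)))
            return True, None
        # Pool is full: the candidate victim is the least important voice, oldest first.
        victim = min(self.voices, key=lambda v: (v.priority, v.order))
        outranks = priority > victim.priority
        tie_steal = self.steal_oldest_on_tie and priority == victim.priority
        if not (outranks or tie_steal):
            return False, None
        self.voices.remove(victim)
        self.voices.append(Voice(sound, priority, next(self._order)))
        return True, victim.sound

    def stop(self, sound: str) -> None:
        """Free the oldest voice playing the given sound, if any."""
        for voice in self.voices:
            if voice.sound == sound:
                self.voices.remove(voice)
                return
```

Keeping important sounds such as music or key gameplay cues at a high priority means they are never the ones dropped when the limit is reached.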

Test everything on your target devices.

Use analytics. Collecting logs of game sessions is very important, because only massive data sets help you understand what problems the project has and how serious they are. These include memory usage statistics, file loading speed data, crash statistics, and more. If you don’t use analytics, the stores will do it for you. If they determine that players are leaving because the game crashes or performs poorly, the game will be ranked lower in search results accordingly.

Challenge: limited dynamic range

Compared with desktop computers, games consoles, and TVs, phones are severely limited in dynamic range. For us as listeners, this means that the difference between the quietest and loudest sounds is smaller, and there are fewer, less detailed gradations of volume.

Developers have to make everything a little louder and more compressed for mobile phones than for desktop computers or consoles.

Solutions

Follow the Sony (–18 LUFS) and Google Developers (–16 LUFS) recommendations for the mix level. However, these are only general guidelines. Unlike PlayStation or Xbox, neither the App Store nor Google Play certifies loudness, so if you don’t meet the proposed standards, your game won’t run into problems during review.
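To see how far a measured mix is from a target, subtract one from the other: applying a static gain of X dB shifts integrated loudness by about X LU, so the difference is roughly the gain change you need. The sketch below uses the Sony and Google Developers targets quoted above; the measured value is hypothetical. Raising gain this way can push peaks into clipping, so in practice it goes together with a limiter.

```python
# Loudness targets quoted above (integrated loudness, LUFS).
TARGETS_LUFS = {"sony": -18.0, "google_developers": -16.0}


def gain_to_target_db(measured_lufs: float, target_lufs: float) -> float:
    """Approximate static gain (dB) that moves a mix from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in dB to an amplitude multiplier."""
    return 10 ** (gain_db / 20)


if __name__ == "__main__":
    measured = -22.5  # hypothetical reading from a loudness meter
    for name, target in TARGETS_LUFS.items():
        gain = gain_to_target_db(measured, target)
        print(f"{name}: {gain:+.1f} dB (x{db_to_linear(gain):.2f} amplitude)")
```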

Create two mixes, one for headphones and one for speakers, with dynamic state changes. Even Unity has a built-in tool for this – Mixer Snapshots. In Wwise, you can create a separate state. Create a template with separate settings for the state when players use speakers, and add a trigger to change the state when they connect or disconnect headphones.
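The switching logic itself is small. In the sketch below, `apply_state` is a hypothetical callback: in Unity it might wrap an `AudioMixerSnapshot.TransitionTo` call, in Wwise a state change. The state names and the 0.5-second transition are assumptions, and how your app learns that headphones were connected depends on the platform and isn’t shown.

```python
from enum import Enum
from typing import Callable, Optional


class OutputRoute(Enum):
    SPEAKER = "speaker"
    HEADPHONES = "headphones"


# Hypothetical state names; use whatever your snapshots or Wwise states are called.
STATE_FOR_ROUTE = {
    OutputRoute.SPEAKER: "Mix_Speaker",
    OutputRoute.HEADPHONES: "Mix_Headphones",
}


class MixStateController:
    """Switches the mix state when the audio output route changes (hypothetical sketch)."""

    def __init__(self, apply_state: Callable[[str, float], None], transition_s: float = 0.5):
        self._apply_state = apply_state    # wraps the engine's snapshot or state change
        self._transition_s = transition_s  # assumed crossfade time between mixes
        self._current: Optional[str] = None

    @property
    def current(self) -> Optional[str]:
        return self._current

    def on_route_changed(self, route: OutputRoute) -> None:
        """Call from the platform's headphone connect/disconnect notification."""
        state = STATE_FOR_ROUTE[route]
        if state != self._current:  # ignore repeated notifications
            self._apply_state(state, self._transition_s)
            self._current = state
```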

Measure the game session mixes to get at least a rough idea of how far you are from the suggested standards. Record videos of real gaming sessions, preferably for at least 30 to 40 minutes, and measure them with a free plugin such as Youlean Loudness Meter. Doing so will help you understand how loud or quiet your game is.

Conclusion

All the technical obstacles I’ve described above shouldn’t ruin your game from a creative point of view. If you’re sure that the result is in line with the creative concept, deviating from the standards is acceptable.

Also remember there are always communities of like-minded people where you can get advice. Professional communities are needed for sharing experiences, learning, and networking. One of these groups is Noise Shelter, a Ukrainian community of audio designers on Discord that currently unites more than 100 professionals.

You may also find my other article “Game audio: an extensive text about working with sound in games” [only available in Ukrainian] useful. This article is mainly aimed at beginners and those interested in the profession and tools.